Artificial Intelligence is quickly becoming an important ingredient in the success of IoT projects, a requirement for unlocking their full potential and a competitive edge for those who embrace it. AI integrates naturally into existing connected sensor/actuator networks and immediately adds measurable value. Until recently it was hard to imagine that AI would be so easily accessible: with just a few libraries installed, we can take advantage of this amazing technology.

Let me illustrate with a simple example – enhancing ordinary IP security cameras with AI. The goal is that the cameras will recognize the objects they see and publish the recognized object’s data to an MQTT topic. I am interested in the detected object’s type, location within the captured frame and recognition confidence level. The applications I am considering are:

- Object following via PTZ (keeping the object of interest, e.g. a person, in the middle of the frame)

- PTZ camera blind spot avoidance, with opposing cameras informing each other when an object of interest moves beyond their current viewing angle

- Smart movement detection i.e. only trigger alarm event if certain object types are detected

- Comparing our presence at home against detected objects of a risky type, i.e. I don’t want to see humans wandering the yard while we are at work, or at night while the security system is armed

- Providing the object’s data over MQTT so other IoT nodes can make decisions/trigger actions
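Two of the ideas above, PTZ following and smart movement detection, can be sketched in a few lines. This is a minimal illustration rather than the code from my script: the function names, the `risky_labels` default and the confidence threshold are assumptions, and the detection dictionaries are assumed to follow the format shown in the darkflow README (`label`, `confidence`, `topleft`, `bottomright`).

```python
from typing import Iterable, Tuple


def center_offset(det: dict, frame_w: int, frame_h: int) -> Tuple[float, float]:
    """Offset of the box centre from the frame centre, each axis scaled to [-1, 1].

    A PTZ controller could pan/tilt to drive both values towards zero.
    """
    cx = (det["topleft"]["x"] + det["bottomright"]["x"]) / 2
    cy = (det["topleft"]["y"] + det["bottomright"]["y"]) / 2
    return ((cx - frame_w / 2) / (frame_w / 2),
            (cy - frame_h / 2) / (frame_h / 2))


def should_alarm(detections: Iterable[dict], armed: bool,
                 risky_labels=("person",), min_confidence: float = 0.5) -> bool:
    """Trigger only when the system is armed and a risky object type is seen."""
    if not armed:
        return False
    return any(d["label"] in risky_labels and d["confidence"] >= min_confidence
               for d in detections)
```

Negative offsets mean the object sits left of or above the frame centre, so the sign can be mapped directly to a pan/tilt direction.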

The project relies on object detection with deep convolutional neural networks using the darkflow library. Setting up darkflow is pretty trivial, simply follow the instructions in the repository. I wrote the script in Python. It starts an FFmpeg background process that captures a single frame from the RTSP stream every 5 seconds and passes it to darkflow for analysis. The analysis results are then published to an MQTT topic and, optionally, a screenshot of the detected objects is saved.
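The analyse-and-publish step might look roughly like the sketch below. It is not a copy of my script: the topic name, the broker host and the helper names are placeholders, and it assumes the `paho-mqtt` package plus a darkflow `TFNet` object whose `return_predict` yields the dictionaries described in the darkflow README.

```python
import json


def to_payload(prediction: dict) -> str:
    """Serialise one darkflow-style prediction as a JSON string for MQTT."""
    return json.dumps({
        "label": prediction["label"],
        # Cast explicitly in case the values arrive as NumPy types.
        "confidence": round(float(prediction["confidence"]), 3),
        "topleft": {k: int(prediction["topleft"][k]) for k in ("x", "y")},
        "bottomright": {k: int(prediction["bottomright"][k]) for k in ("x", "y")},
    }, sort_keys=True)


def analyse_and_publish(frame_path, tfnet,
                        topic="home/camera/objects",  # hypothetical topic
                        host="localhost"):            # hypothetical broker
    """Run detection on one captured frame and publish each detected object."""
    # Third-party imports stay local so to_payload() works without them.
    import cv2
    import paho.mqtt.publish as publish

    frame = cv2.imread(frame_path)
    if frame is None:  # frame missing or not fully written yet
        return 0
    predictions = tfnet.return_predict(frame)
    for prediction in predictions:
        publish.single(topic, to_payload(prediction), hostname=host)
    return len(predictions)
```

Following the darkflow README, the `tfnet` argument would be created once at startup with something like `TFNet({"model": "cfg/yolo.cfg", "load": "bin/yolo.weights", "threshold": 0.1})`; the exact paths depend on where the config and weights live on your machine.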

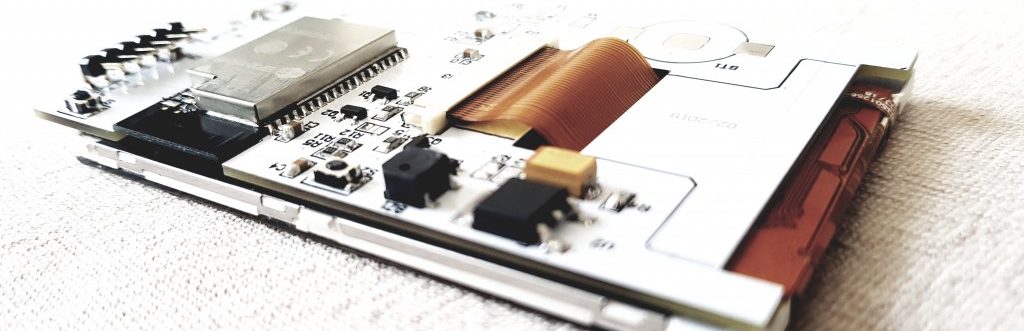

The reason I used FFmpeg rather than OpenCV to capture the RTSP frames is that OpenCV uses RTSP over TCP by default, while my specific camera model only provides RTSP over UDP. I tried to recompile OpenCV with UDP support, but could not get it to work reliably. I use a RAM drive to avoid excessive writes on my SSD. Ideally, this setup will run on a Raspberry Pi 3 B+; I have one on order.
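For reference, a capture process along these lines can be started as sketched below. The RTSP URL and the RAM drive path are placeholders, and the exact flags in my script may differ; the options used here (`-rtsp_transport`, the `fps` filter and the image muxer's `-update`) are standard FFmpeg options.

```python
import subprocess


def build_ffmpeg_cmd(rtsp_url, out_path, interval_s=5, transport="udp"):
    """Build an FFmpeg command that keeps overwriting one image file."""
    return [
        "ffmpeg",
        "-loglevel", "error",
        "-rtsp_transport", transport,  # input option, so it must precede -i
        "-i", rtsp_url,
        "-vf", f"fps=1/{interval_s}",  # one output frame every interval_s seconds
        "-update", "1",                # write every frame to the same file
        "-y",                          # overwrite without asking
        out_path,
    ]


def start_capture(rtsp_url, out_path, interval_s=5):
    """Start FFmpeg in the background and return the process handle."""
    return subprocess.Popen(build_ffmpeg_cmd(rtsp_url, out_path, interval_s))
```

A call such as `start_capture("rtsp://user:pass@camera/stream", "/mnt/ramdisk/frame.jpg")` (both values hypothetical) leaves the latest frame on the RAM drive for the analysis loop to pick up.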

My code is available here for you to try out.

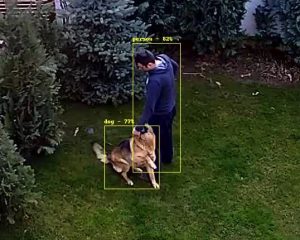

A couple of screenshots; note how the detected objects are outlined and labeled, and how the confidence level is visualised:

- At night, still works well; car and person detected

- Me and my dog detected

Which camera model are you using? (I see it has day/night mode)

Sricam SP008 1.3M Wireless IP Camera 960P SD Card 128G 5X Optical Zoom IR Onvif IP Camera PTZ Outdoor Waterproof PTZ IPC

http://s.aliexpress.com/Eb6nEV7J

Hi Martin, great project ! A couple of questions if I may ?

1. How would I use the RasPi camera locally ?

2. In your python script I notice the yolo.weights file is stored off-box. Why is that ?

3. Is all processing done by the raspi ? or is the raspi just grabbing the images and handing them to a much larger box for actual processing ?

Thanks

Gah sorry I’ll reply to my own post, I now understand this is running on a Mac, and the yolo.weights is just located elsewhere in the file-system so that answers 2&3 and for 1. I can use the pi-camera API (https://picamera.readthedocs.io/en/latest/recipes1.html#capturing-timelapse-sequences) and a thread to capture images (a’la http://sebastiandahlgren.se/2014/06/27/running-a-method-as-a-background-thread-in-python/)
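A rough sketch of that idea, assuming the `picamera` package on the Pi: a background thread calls a capture function at a fixed interval and overwrites one image file. The class and function names are made up for illustration.

```python
import threading


class PeriodicCapture:
    """Call `capture` every `interval_s` seconds on a background thread."""

    def __init__(self, capture, interval_s=5.0):
        self._capture = capture
        self._interval = interval_s
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self):
        while not self._stop.is_set():
            self._capture()
            # wait() returns early when stop() is called, so shutdown is prompt.
            self._stop.wait(self._interval)

    def start(self):
        self._thread.start()

    def stop(self):
        self._stop.set()
        self._thread.join()


def picamera_capture(path):
    """Return a capture callable backed by the Pi camera module."""
    import picamera  # only available on a Raspberry Pi with the camera enabled
    camera = picamera.PiCamera()
    return lambda: camera.capture(path)
```

Usage would be something like `PeriodicCapture(picamera_capture("/mnt/ramdisk/frame.jpg")).start()`, where the path is a placeholder for wherever the detection script expects its frames.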

I’ll see how i go…

You figured it out 🙂 Just feed the script with images and the magic happens. I just received my RPi 3B+ today and will test whether it works well.

Awesome project! I’m a cctv/access control/voice/data/alarm(low voltage) field tech. Have you thought of adding cloud recording ability via another dedicated remote RPi0 or a cloud service such as Dropbox?

I will definitely be keeping track of this project.

Thanks again!

Yes, running this in the cloud was the original plan. It will probably mean some traffic overhead and some security concerns.

Why 1 frame every 5 seconds? Would this not greatly limit its detection ability? A quick-moving car or person would likely be able to zip past the camera’s field of view before it cycles for another picture?

You can pick any value as long as your computer can handle it. Running this on a Titan X GPU can enable real-time processing; on a Raspberry Pi, however, a maximum of one frame every 3 seconds is possible, at least for my setup.
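One way to find out what a given machine can handle is to time each analysis cycle and only sleep for whatever is left of the chosen interval. The sketch below is illustrative only; `process_frame` stands for whatever function grabs and analyses one frame.

```python
import time


def remaining_wait(interval_s, started, finished):
    """Seconds left to sleep so that cycles start interval_s apart (never negative)."""
    return max(0.0, interval_s - (finished - started))


def run_cycles(process_frame, interval_s=5.0, cycles=3):
    """Run process_frame repeatedly, pacing the loop to interval_s where possible."""
    for _ in range(cycles):
        started = time.monotonic()
        process_frame()
        finished = time.monotonic()
        # If processing took longer than the interval, the wait is zero and
        # the interval is effectively too short for this hardware.
        time.sleep(remaining_wait(interval_s, started, finished))
```

If the wait is consistently zero, the chosen interval is shorter than the machine's per-frame processing time and should be increased.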

Ok, that makes sense! Thanks!

Have you considered using an Intel Neural Compute Stick as an accelerator?

No, but that looks quite an interesting option. Thanks for the tip!

When you say follow the instructions in the repository, do you mean the readme file for darkflow? It was not so trivial for me. I guess I don’t quite possess the knowledge required to understand what is really going on there.

Where did you get stuck? It was probably an oversimplified statement on my part, but it isn’t that hard to install.

I might use your help here if you don’t mind. I have a Raspberry PI 3 with more-or-less standard raspbian image with node-red I am using for “home automation”. My Linux understanding is fairly basic, I am a low level user mostly playing in node-red. Let me know if my understanding is correct:

First I need to install Darknet: I just follow the steps here: https://pjreddie.com/darknet/install/. Should I pick OpenCV or CUDA? I can’t pick which would be better in this case.

Next I install Yolo using the steps here: https://pjreddie.com/darknet/yolo/

Next I download darkflow: I just download this to my /home/pi/darkflow folder? I also can’t really tell which compile option to use? Just use the inplace option?

At this point I assume I can download one of the pre-trained weights files and use your python example to process IP camera images. That should be all, right?

You only need darkflow compiled in place and the weights from darknet/yolo. You will need OpenCV installed on your raspberry pi.

I installed openCV. Downloaded the darkflow to my .flow folder and tried to compile it. I got the following message:

File “setup.py”, line 3, in

from Cython.Build import cythonize

ImportError: No module named ‘Cython’

I installed Cython (pip install --user Cython). Installation was successful, but the same message on the darkflow build persists.

I guess it is due to the virtualenv you installed it in. You have more reading to do 🙂
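A quick way to check this kind of mismatch is to ask the interpreter that runs the build which environment it belongs to and whether it can see the module. The helper below is a small diagnostic sketch with a made-up name; it only uses the standard library.

```python
import importlib.util
import sys


def module_report(name="Cython"):
    """Report which interpreter is running and whether it can import `name`."""
    spec = importlib.util.find_spec(name)
    in_venv = (hasattr(sys, "real_prefix")  # set by older virtualenv releases
               or sys.prefix != getattr(sys, "base_prefix", sys.prefix))
    return {
        "interpreter": sys.executable,
        "in_virtualenv": in_venv,
        "module_found": spec is not None,
        "module_location": getattr(spec, "origin", None),
    }


if __name__ == "__main__":
    for key, value in module_report().items():
        print(f"{key}: {value}")
```

Run it with the same interpreter that is used for the darkflow build. If `module_found` is false there, installing with `python3 -m pip install Cython` from inside the active environment ties pip to that interpreter; packages installed with `--user` are normally not visible inside a virtualenv.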

Hello, I’m trying your project using RPI 3B and IP Cam, but I got an error in python..

Traceback (most recent call last):

File “/home/pi/IPcam/AI-ipcam-master/ai-ipcam.py”, line 101, in

time.sleep(1)

I can control PTZ using python and I also created a ramdisk on RPI.

I think my cam frame is not ready, but I don’t know the reason..

I’d appreciate it if you can tell me how to solve it.

Thanks!

Hi,

that error message doesn’t reveal much about what the issue is. I suggest printing out more debug info to help narrow it down. You can easily check, for example, whether the screengrabs are being stored on the temp RAM drive.
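As an example of such a check, the sketch below reports whether the frame file on the RAM drive exists, is non-empty and was written recently. The function name, the path and the age threshold are placeholders.

```python
import os
import time


def frame_status(path, max_age_s=15.0):
    """Classify the captured frame file as missing, empty, stale or fresh."""
    if not os.path.exists(path):
        return "missing"   # FFmpeg never wrote a frame (bad URL, wrong path?)
    if os.path.getsize(path) == 0:
        return "empty"     # file created but no image data written
    age = time.time() - os.path.getmtime(path)
    return "stale" if age > max_age_s else "fresh"


if __name__ == "__main__":
    # Hypothetical RAM drive location; adjust to your own setup.
    print(frame_status("/mnt/ramdisk/frame.jpg"))
```

"missing" or "stale" points at the capture side (FFmpeg, RTSP URL, RAM drive mount), while "fresh" suggests the problem lies in the analysis step.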

Greetings, I am trying to get this up and running on my pi now but I am getting the error

Traceback (most recent call last):

File “”, line 1, in

ImportError: cannot import name ‘TFNet’

Are you actually using “tensorflow1.0” as described in the requirements? the earliest version I can find on the tensorflow repository is 1.7 and the current version is 1.9.

Thanks, can’t wait to get this working!

I figured this problem out: I was trying to import darkflow from outside of the main folder. I moved my script into that folder and it started working much better.
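An alternative to moving the script, assuming darkflow was built in place, is to add the darkflow checkout to Python's module search path before importing it. The helper name and the checkout location below are illustrative.

```python
import os
import sys


def add_repo_to_path(repo_dir):
    """Put a source checkout at the front of sys.path (once) and return its path."""
    repo_dir = os.path.abspath(os.path.expanduser(repo_dir))
    if repo_dir not in sys.path:
        sys.path.insert(0, repo_dir)
    return repo_dir


# Example (hypothetical checkout location):
#   add_repo_to_path("/home/pi/darkflow")
#   from darkflow.net.build import TFNet
```

Relative paths passed to darkflow, such as the config and weights locations, are presumably still resolved against the current working directory, so they may need to be made absolute when the script lives elsewhere.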

Great! I wasn’t sure what the issue was, good that you figured it out.

Hello Martin, I was pleasantly surprised that someone has already worked on what I was planning to start on.

I have (successfully) installed all the dependencies required to run your project, however I am getting an “Illegal Operation” message after calling “python ai-ipcam.py”. I have pointed it to a valid RTSP URL for my cameras. This is the same one configured in my NVR, with the credentials included in the URL.

Things I did to install the deps and run your project:

– cloned the darkflow repo: https://github.com/thtrieu/darkflow

– pip installed all the deps for darkflow (numpy, tensorflow, cython)

– changed the default RTSP stream in the .py file because I’m lazy

– ran your program with “python ai-ipcam.py”

– redid everything with pip3 & python3, as I read darkflow requires python3. This includes running your file with python3.

Is this something that might have to do with this project being 6 months old and certain instructions being deprecated, or am I doing something wrong?